In April of this year (2008), at the 17th International World Wide Web Conference in Beijing, China, Google researchers presented findings from an experiment with a new way of indexing images. The approach relies to some degree on the actual content of the images, rather than only on things such as text and metadata associated with those pictures.

Our First Look at VisualRank

The paper, PageRank for Product Image Search (pdf), details the results of a series of experiments involving the retrieval of images for 2000 of the most popular product queries that Google receives, such as iPod and Xbox. The authors of the paper tell us that user satisfaction and relevancy of results improved significantly in comparison to the results from Google’s image search.

News of this “PageRank for Pictures” or VisualRank spread quickly across many blogs including TechCrunch and Google Operating System, as well as media sources such as the New York Times and The Register from the UK.

The authors of that paper tell us that it makes three contributions to the indexing of pictures:

- We introduce a novel, simple, algorithm to rank images based on their visual similarities.

- We introduce a system to re-rank current Google image search results. In particular, we demonstrate that for a large collection of queries, reliable similarity scores among images can be derived from a comparison of their local descriptors.

- The scale of our experiment is the largest among the published works for content-based-image ranking of which we are aware. Basing our evaluation on the most commonly searched for object categories, we significantly improve image search results for queries that are of the most interest to a large set of people.

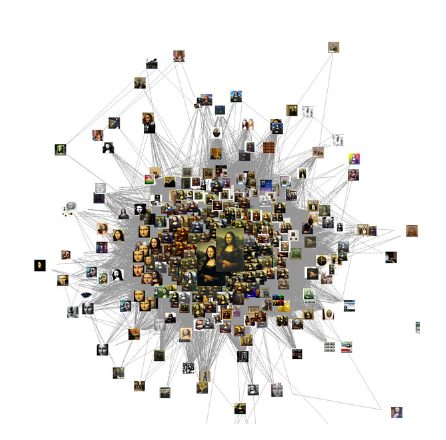

The process behind ranking images based upon visual similarities takes into account small features within the images, while adjusting for such things as differences in scale, rotation, perspective and lighting. The paper shows an illustration of 1,000 pictures of the Mona Lisa, with the two largest, at the center of the illustration, being the highest ranked images in a query for “mona lisa”.
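To make the idea of comparing images by their small features more concrete, here is a minimal sketch in Python. It is not the authors' implementation. It assumes each image has already been reduced to a list of local descriptor vectors by some feature extractor (which is where the tolerance to scale, rotation and lighting would come from), and it assumes a simple scoring rule: the number of matching descriptors divided by the average number of descriptors in the two images. The function names, the toy 2-D descriptors and the `max_distance` threshold are all hypothetical and chosen only for illustration.

```python
import math


def euclidean(a, b):
    """Straight-line distance between two descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def count_matches(desc_a, desc_b, max_distance=0.5):
    """Count descriptors in desc_a that have a close counterpart in desc_b.

    max_distance is an assumed threshold, not a value from the paper.
    """
    matches = 0
    for d in desc_a:
        if any(euclidean(d, e) <= max_distance for e in desc_b):
            matches += 1
    return matches


def image_similarity(desc_a, desc_b):
    """Shared features divided by the average feature count of both images."""
    if not desc_a or not desc_b:
        return 0.0
    # Take the smaller of the two directions so the score is symmetric.
    shared = min(count_matches(desc_a, desc_b), count_matches(desc_b, desc_a))
    average_count = (len(desc_a) + len(desc_b)) / 2
    return shared / average_count


# Toy descriptors: real ones would have many more dimensions.
photo_one = [(0.0, 0.0), (10.0, 10.0), (5.0, 0.0)]
photo_two = [(0.1, 0.0), (10.0, 10.1), (0.0, 5.0)]
unrelated = [(50.0, 50.0), (60.0, 60.0)]

similar_score = image_similarity(photo_one, photo_two)
unrelated_score = image_similarity(photo_one, unrelated)
```

In this toy data, the two photos share two of their three features and so score well above the unrelated image, which shares none.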

A Second Look at VisualRank

In the conclusion to PageRank for Product Image Search, the authors noted some areas that they needed to explore further, such as how effectively their system might work in real world circumstances on the Web, where mislabeled spam images might appear, as well as many duplicate and near duplicate versions of images.

A new paper from the authors takes a deeper look at the algorithms behind VisualRank, and provides some answers to the problems of spam and duplicate images – VisualRank: Applying PageRank to Large-Scale Image Search (pdf).

The new VisualRank paper also expands upon the experimentation described in the first paper, which focused upon queries for images of products, to include queries for 80 common landmarks such as the Eiffel Tower, Big Ben, and the Lincoln Memorial.

This VisualRank approach appears to still rely initially upon older methods of ranking images, which look at things such as text and metadata (like alt text) associated with those images, to come up with a limited number of images to compare with each other. Once it receives those pictures in response to a query, a reranking of those images takes place based upon shared features and similarities between the images.
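The reranking step can be pictured as running a PageRank-style calculation over the candidate images, where visual similarity takes the place of links. The sketch below is an illustration under assumptions rather than Google's code: it takes a precomputed, symmetric similarity table for the candidates returned by a text-based search, normalizes each column so an image splits its "vote" among the images it resembles, and repeats the calculation until the scores settle. The `damping` value of 0.85, the function names and the file names are hypothetical.

```python
def visual_rank(similarity, damping=0.85, iterations=100, tolerance=1e-9):
    """PageRank-style power iteration over a pairwise similarity table.

    similarity[i][j] is a non-negative score between images i and j.
    """
    n = len(similarity)
    if n == 0:
        return []
    # Total similarity each image has to hand out to the others.
    column_sums = [sum(similarity[i][j] for i in range(n)) for j in range(n)]
    scores = [1.0 / n] * n
    for _ in range(iterations):
        new_scores = []
        for i in range(n):
            incoming = 0.0
            for j in range(n):
                if column_sums[j] > 0:
                    incoming += similarity[i][j] / column_sums[j] * scores[j]
                else:
                    # An image similar to nothing spreads its score evenly.
                    incoming += scores[j] / n
            new_scores.append(damping * incoming + (1.0 - damping) / n)
        change = sum(abs(a - b) for a, b in zip(new_scores, scores))
        scores = new_scores
        if change < tolerance:
            break
    return scores


def rerank(candidates, similarity):
    """Reorder text-search candidates by their visual rank, highest first."""
    scores = visual_rank(similarity)
    order = sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)
    return [candidates[i] for i in order]


# Candidates as a text-based search might return them (hypothetical names).
candidates = ["spam.jpg", "c.jpg", "a.jpg", "b.jpg"]
similarity = [
    # spam   c     a     b
    [0.00, 0.05, 0.05, 0.05],  # spam.jpg resembles almost nothing
    [0.05, 0.00, 0.80, 0.70],  # c.jpg
    [0.05, 0.80, 0.00, 0.90],  # a.jpg
    [0.05, 0.70, 0.90, 0.00],  # b.jpg
]

reranked = rerank(candidates, similarity)
# → ['a.jpg', 'b.jpg', 'c.jpg', 'spam.jpg']
```

The mislabeled image that the text-based search placed first falls to the bottom because few other candidates resemble it, which is the kind of behavior the second paper is looking for when it addresses spam.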

Conclusion

Hopefully, if you have a website where you include images to help visitors experience what your pages are about in a visual manner, you’re now asking yourself how good a representation your picture is of what your page is about.

Image search is another way that people can find your pages. And the possibility that a search engine might include a picture from your page in search results, next to your page title, description, and URL, is a very real one. Google has been doing it for News searches for a while.