By Bill Slawski

There’s an old saying that goes, “A picture’s worth a thousand words.” The right image on a web page can communicate ideas that words may only begin to capture.

An image in a news article may transport a viewer into the middle of the story. A couple of sharp images, taken from different angles, may inspire someone to buy something online that they previously would have bought only offline, like shoes or clothes. A portrait of a writer, a business owner, or a researcher may bring an increased level of credibility and trust to a web site.

Search Engines and Images

All of the major search engines let us search their databases of images. They have also started blending images into their regular Web search results, adding color and diversity to those results and offering a way to illustrate concepts that might be related to a query term.

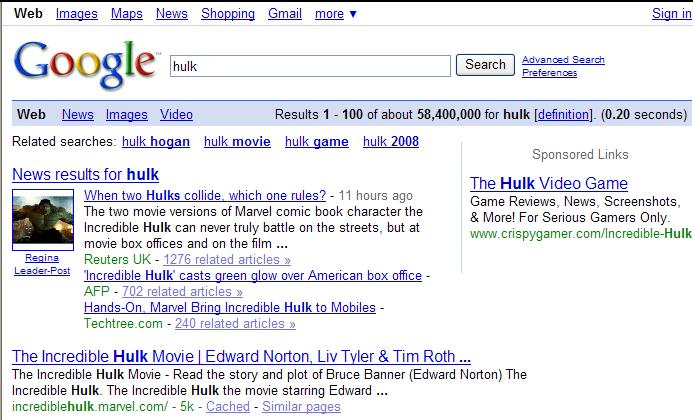

A picture next to a news result may provide context for the news story very quickly, like in the Google search result below:

While search engines index pages, pictures, videos, and a host of other objects that they find on the Web, their approach to helping us find images has relied upon text, and upon matching the keywords we enter into a search box. A search engine normally indexes an image based upon words that appear on the same page as the picture, in the alternative (alt) text associated with the image, in a caption for the picture, in the address, or URL, of the page, or in the anchor text of links pointing to the photo or to the page where it appears.
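To make that text-based approach concrete, here is a minimal Python sketch of an inverted index that scores images by the words found in each of those places. The field names and the weights in `FIELD_WEIGHTS` are hypothetical; no search engine has published how much it trusts each source of text.

```python
import re
from collections import defaultdict

# Hypothetical weights for each text source; these are illustrative, not published values.
FIELD_WEIGHTS = {
    "alt": 3.0,
    "caption": 2.5,
    "anchor": 2.0,
    "url": 1.5,
    "page": 1.0,
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(images):
    """Map each word to {image_id: accumulated weight} across all text fields."""
    index = defaultdict(lambda: defaultdict(float))
    for image_id, fields in images.items():
        for field, text in fields.items():
            weight = FIELD_WEIGHTS.get(field, 0.0)
            for word in tokenize(text):
                index[word][image_id] += weight
    return index


def search(index, query):
    """Score images by summing the weights of the query words they match."""
    scores = defaultdict(float)
    for word in tokenize(query):
        for image_id, weight in index.get(word, {}).items():
            scores[image_id] += weight
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

With this weighting, a query for "running shoes" ranks an image whose alt text reads "red running shoes" above an image that merely shares the word "shoes" with the text of its surrounding page. The point is that nothing in the picture itself is examined.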

That reliance upon the words associated with images to index and rank pictures may be changing. Google recently released a paper, PageRank for Product Image Search, that looks at similarities between the images themselves to rank pictures in a search. Microsoft just published a patent application on ranking images that looks at nontextual signals, such as the number of links pointing to a picture, how frequently a picture appears on a site, and the size and quality of the picture, to help rank those images.
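The core idea of the Google paper, treating visual similarity between images the way PageRank treats links between pages, can be sketched in a few lines. This is a simplified illustration, not the paper's implementation: the similarity scores are assumed inputs (the paper computes them from visual features of the images, which this sketch does not attempt), and the damping factor of 0.85 is borrowed from the usual PageRank convention.

```python
def visual_rank(similarity, damping=0.85, iterations=100, tol=1e-9):
    """Rank images by PageRank-style power iteration over a similarity graph.

    similarity[i][j] is a non-negative visual similarity between images i and j;
    self-similarity on the diagonal is ignored. Each image "votes" for the images
    it resembles, in proportion to how similar they are.
    """
    n = len(similarity)
    # out[j]: total similarity image j shares with all other images (column sum).
    out = [sum(similarity[i][j] for i in range(n) if i != j) for j in range(n)]
    rank = [1.0 / n] * n
    for _ in range(iterations):
        new_rank = []
        for i in range(n):
            # Collect votes from every other image, normalized by its out-weight.
            votes = sum(
                similarity[i][j] / out[j] * rank[j]
                for j in range(n)
                if j != i and out[j] > 0
            )
            new_rank.append((1 - damping) / n + damping * votes)
        diff = sum(abs(a - b) for a, b in zip(new_rank, rank))
        rank = new_rank
        if diff < tol:  # stop once the scores have settled
            break
    return rank
```

Given three product shots where two are near-duplicates and the third looks different, the two similar shots receive the highest scores and the odd one out ranks last. That is the intuition behind using image-to-image similarity as a ranking signal: images that many other relevant images resemble are probably the most representative.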

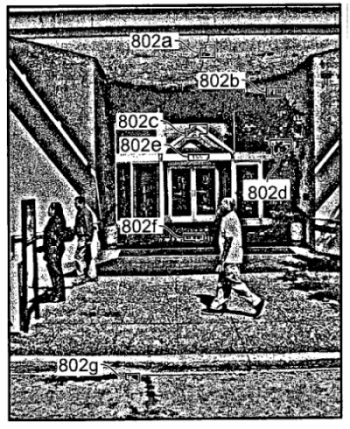

A Google patent application from January described ways that a search engine might read text in images, including the words and signs it sees while collecting pictures for its Street View project for Google Maps. The picture above shows the locations of text in a Street View image that Google could use in its index.
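Once text has been read from an image, it can be treated much like any other index term. The sketch below assumes a hypothetical OCR step that returns `TextDetection` records (the text, a confidence score, and a bounding box); it keeps only confident readings and remembers where in the picture each word appeared. The record format and the 0.6 confidence cutoff are illustrative assumptions, not details from Google's patent application.

```python
from dataclasses import dataclass


@dataclass
class TextDetection:
    """One piece of text a (hypothetical) OCR step claims to have found in an image."""
    text: str
    confidence: float  # 0.0 to 1.0
    box: tuple  # (x, y, width, height) in pixels


def index_terms_from_detections(detections, min_confidence=0.6):
    """Turn OCR detections into lowercase index terms, keeping where each term was seen."""
    terms = {}
    for det in detections:
        if det.confidence < min_confidence:
            continue  # skip text the OCR step was unsure about
        for word in det.text.lower().split():
            terms.setdefault(word, []).append(det.box)
    return terms
```

Keeping the bounding boxes alongside the words means a storefront sign and a street sign in the same photo can be told apart later, which matters when the text found in a picture is the only clue to what it shows.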

Search engines are getting smarter about how they view, index, and rank images, and site owners should probably consider getting smarter about the images they use on their pages to illustrate what they have to offer.

Making Room for Images in Search

What if we could send a picture to a search engine and have it return related pictures, news stories, or web pages? In a New York Times article from a couple of years back, “The Route From Research to Start-Up,” the founder of Nevengineering described one of the technologies he was working on:

Ultimately, the technology “will allow you to point your camera phone at a movie poster or a restaurant and get an immediate review of the film or the fare on your cellphone, which will tap into databases,” said Mr. Neven, who foresees one billion camera phones in use worldwide by 2010.

Imagine snapping a photo, and having a search engine provide you with information about the subject of that picture.

Google acquired Mr. Neven’s startup a couple of years ago, and in a post on the Official Google Blog titled “A better way to organize photos?”, the company suggested that the acquired technology could be used to organize photos.

Having software that could look at your photo collection and index and organize your images based upon what it sees in the pictures themselves is pretty amazing.

But the image recognition technology from Nevengineering could do more than sort photos. It could also be used to search for information related to images.
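Searching with a picture usually comes down to comparing a numeric description of the query photo against descriptions of images already in an index. In this minimal sketch, each image is assumed to have already been reduced to a feature vector by some recognition model (not shown, and hypothetical here), and the search returns the stored entries whose vectors point in the most similar direction, measured by cosine similarity.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search_by_image(query_features, catalog, top_k=3):
    """Return (score, info) pairs for the catalog entries that best match the query photo."""
    scored = [
        (cosine_similarity(query_features, entry["features"]), entry["info"])
        for entry in catalog
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]
```

In the movie poster scenario, a phone snapshot of the poster would be turned into a feature vector, matched against the stored vector for that poster, and answered with whatever review information was kept alongside it.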

Before the company developed a consumer-related product, it started out as a biometrics company, providing technology for law enforcement and the military. A presentation on one of its technologies, SIMBA: Single Image Multi-Biometric Analysis (pdf), gives an idea of what the company was capable of when it came to recognizing faces and associating them with people. The technology could also perform facial recognition in videos as well as in still images.

Faces First, Other Image Features Later?

Google doesn’t offer the ability to search based upon images that you upload to the search engine. At least, not yet. But it appears that Google may have a start on technology that could make that possibility a reality at some point.

Last year, a post on the Google Operating System blog, “Restrict Google Image Results to Faces, News,” pointed out a way to limit image search results to faces or to news photos by adding a short string of text to the end of the address, or URL, of an image search.
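Adding a filter like that amounts to appending a parameter to the query string of the search URL. The parameter name `imgtype` and the values `face` and `news` are as the trick was commonly reported at the time; treat them as assumptions, and don't expect the option to still work. The helper below uses only Python's standard `urllib.parse` module.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def add_query_param(url, key, value):
    """Return url with key=value added to (or replaced in) its query string."""
    parts = urlparse(url)
    params = dict(parse_qsl(parts.query))  # existing parameters, in order
    params[key] = value
    return urlunparse(parts._replace(query=urlencode(params)))


# Hypothetical example: restrict an image search to faces (parameter name assumed).
faces_url = add_query_param("http://images.google.com/images?q=bill+slawski", "imgtype", "face")
```

Because the parameters are held in a dictionary, calling the helper again with `"news"` replaces the earlier value rather than adding a second, conflicting one.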

A patent application recently published by Google describes how the search engine might take facial images that carry metadata identifying specific people by name, and use those pictures to build a statistical model of their faces.

That statistical model could then be used to associate those people’s names with other images that lack such metadata, like alternative text in alt attributes, captions, or text on the same pages. The patent application is:

Identifying Images Using Face Recognition

Invented by Jay Yagnik

Assigned to Google

US Patent Application 20080130960

Published June 5, 2008

Filed December 1, 2006

Abstract

A method includes identifying a named entity, retrieving images associated with the named entity, and using a face detection algorithm to perform face detection on the retrieved images to detect faces in the retrieved images. At least one representative face image from the retrieved images is identified, and the representative face image is used to identify one or more additional images representing the at least one named entity.
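The steps in the abstract can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the method claimed in the patent application: face detection is assumed to have already produced a numeric feature vector for each face, the “representative face” is chosen here as the one most similar on average to the other faces retrieved for the name (a single vector standing in for the patent's statistical model), and the 0.9 similarity threshold is arbitrary.

```python
import math


def cosine(a, b):
    """Cosine similarity between two face feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def representative_face(faces):
    """Pick the face most similar, on average, to the other faces found for a name.

    Images retrieved for a name usually show that person more often than anyone
    else, so the face that agrees best with the rest is a reasonable stand-in.
    """
    def mean_similarity(i):
        others = [cosine(faces[i], f) for j, f in enumerate(faces) if j != i]
        return sum(others) / len(others) if others else 0.0

    best = max(range(len(faces)), key=mean_similarity)
    return faces[best]


def label_new_images(name, named_faces, unlabeled, threshold=0.9):
    """Attach `name` to unlabeled images whose face resembles the representative face."""
    rep = representative_face(named_faces)
    return {
        image_id: name
        for image_id, face in unlabeled.items()
        if cosine(rep, face) >= threshold
    }
```

Choosing the face that agrees with the majority helps with a practical problem: a search for a person's name also retrieves photos of other people who appear near that name, and those outliers should not become the model.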

It makes sense for Google to focus upon faces first, before tackling other aspects of indexing images based upon their content. If Google can master indexing images it finds on the Web that have no text or metadata associated with them, that may bring the search engine a step closer to providing search results for images uploaded by a searcher.

Narrowing the problem of indexing and searching images down to one aspect, such as facial recognition, could allow the search engine to tackle image search in incremental steps. Choosing facial images as that first step does raise some issues, especially from a privacy standpoint. Allowing people to upload images of faces and search upon them may raise a number of privacy issues that a search engine may not want to address.

Meanwhile, Yahoo Looks at Landmarks

Another approach to indexing and ranking images is under way at Yahoo, in a Flickr-related project that takes images tagged with geographic terms and locations and tries to cluster similar images together based upon the locations identified in those tags. The tags include both user-created annotations and automatic annotations from “location-aware cameraphones and GPS integrated cameras.”

Using automatically generated location data, along with software that can cluster similar images together, again goes beyond looking only at the words associated with pictures to learn what they are about.
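The location half of that idea can be sketched as a simple greedy clustering of geotagged photos, naming each cluster after its most common user tag. This is not Yahoo's algorithm: the 0.5 km radius, the seed-based greedy grouping, and the tag-voting rule are assumptions for illustration, and the project's visual-similarity step is left out entirely.

```python
import math
from collections import Counter


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points on Earth, in kilometres."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def cluster_by_location(photos, radius_km=0.5):
    """Greedily group photos: each joins the first cluster whose seed is within radius_km."""
    clusters = []  # each cluster: {"seed": (lat, lon), "photos": [...]}
    for photo in photos:
        for cluster in clusters:
            if haversine_km(*cluster["seed"], photo["lat"], photo["lon"]) <= radius_km:
                cluster["photos"].append(photo)
                break
        else:
            # No nearby cluster: this photo seeds a new one.
            clusters.append({"seed": (photo["lat"], photo["lon"]), "photos": [photo]})
    return clusters


def label_cluster(cluster):
    """Name a cluster after the user tag that appears on the most of its photos."""
    counts = Counter(tag for photo in cluster["photos"] for tag in set(photo["tags"]))
    return counts.most_common(1)[0][0] if counts else None
```

Letting many users' tags vote within a cluster is what makes the approach robust: one photographer's "night" or "vacation" tag is outvoted by the landmark name that most people used.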

The narrow focus of this project, on well-known locations, again allows smarter image search technology to be developed incrementally. This choice of topic may also raise fewer privacy concerns than Google’s focus upon faces.

Conclusion

Search engines’ approaches to indexing and ranking images may soon incorporate technologies that move them away from a strict reliance upon text appearing on the same pages as the pictures, if they don’t already.

Images are being shown in Web search results in increasing numbers, so changes in this emerging area of search are worth keeping a careful eye upon.

Images on a web site can help illustrate the ideas and concepts on web pages in a way that words alone can’t. If pictures capture the essence of a concept or query, whether through the text associated with them on those pages or even in the absence of such text, they may start appearing in blended search results at one of the major search engines.

Facial recognition, and clustering images around landmarks based upon geographic tags and visual similarity, are just two steps toward image search technology on the Web that relies less upon words and more upon what is captured in the images themselves.

The right picture on a web page may become not only a way to illustrate the ideas being presented on that page, but also a way for people to find that page based upon the content of the image rather than just the words that surround it.